Call to Run the Algorhythm Again

Yard-means Clustering: Algorithm, Applications, Evaluation Methods, and Drawbacks

Clustering

Clustering is one of the most common exploratory data analysis technique used to go an intuition about the structure of the data. It tin be defined as the task of identifying subgroups in the information such that data points in the same subgroup (cluster) are very similar while data points in different clusters are very different. In other words, we endeavour to find homogeneous subgroups within the data such that data points in each cluster are as like as possible according to a similarity measure such as euclidean-based altitude or correlation-based distance. The decision of which similarity mensurate to use is awarding-specific.

Clustering analysis tin can exist done on the ground of features where we attempt to observe subgroups of samples based on features or on the basis of samples where we attempt to find subgroups of features based on samples. We'll cover here clustering based on features. Clustering is used in market segmentation; where we try to detect customers that are similar to each other whether in terms of behaviors or attributes, epitome segmentation/compression; where we try to group similar regions together, document clustering based on topics, etc.

Unlike supervised learning, clustering is considered an unsupervised learning method since we don't accept the footing truth to compare the output of the clustering algorithm to the true labels to evaluate its performance. We merely desire to effort to investigate the structure of the data past grouping the data points into distinct subgroups.

In this post, we volition cover only Kmeans which is considered as i of the near used clustering algorithms due to its simplicity.

Kmeans Algorithm

Kmeans algorithm is an iterative algorithm that tries to partition the dataset into Kpre-defined distinct non-overlapping subgroups (clusters) where each data point belongs to simply i group. It tries to make the intra-cluster information points as similar as possible while also keeping the clusters as different (far) as possible. It assigns data points to a cluster such that the sum of the squared distance between the information points and the cluster's centroid (arithmetic mean of all the data points that vest to that cluster) is at the minimum. The less variation we accept within clusters, the more than homogeneous (similar) the data points are within the same cluster.

The way kmeans algorithm works is as follows:

- Specify number of clusters Thousand.

- Initialize centroids by outset shuffling the dataset and then randomly selecting K information points for the centroids without replacement.

- Keep iterating until there is no change to the centroids. i.e assignment of data points to clusters isn't changing.

- Compute the sum of the squared altitude between data points and all centroids.

- Assign each data point to the closest cluster (centroid).

- Compute the centroids for the clusters by taking the average of the all data points that belong to each cluster.

The approach kmeans follows to solve the trouble is called Expectation-Maximization. The E-step is assigning the data points to the closest cluster. The 1000-footstep is calculating the centroid of each cluster. Below is a break downwardly of how we can solve it mathematically (experience gratis to skip it).

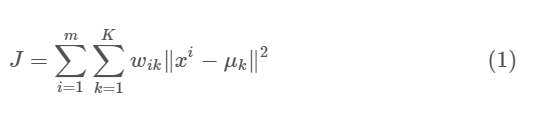

The objective role is:

where wik=i for data point xi if it belongs to cluster thousand; otherwise, wik=0. Besides, μk is the centroid of eleven'due south cluster.

Information technology's a minimization trouble of 2 parts. Nosotros first minimize J west.r.t. wik and care for μk fixed. And then we minimize J west.r.t. μk and treat wik stock-still. Technically speaking, we differentiate J due west.r.t. wik starting time and update cluster assignments (E-stride). So we differentiate J due west.r.t. μk and recompute the centroids afterward the cluster assignments from previous step (M-step). Therefore, E-footstep is:

In other words, assign the data point 11 to the closest cluster judged by its sum of squared distance from cluster's centroid.

And One thousand-stride is:

Which translates to recomputing the centroid of each cluster to reflect the new assignments.

Few things to note hither:

- Since clustering algorithms including kmeans use distance-based measurements to decide the similarity between information points, information technology'south recommended to standardize the data to take a hateful of cipher and a standard deviation of one since almost e'er the features in any dataset would have different units of measurements such every bit age vs income.

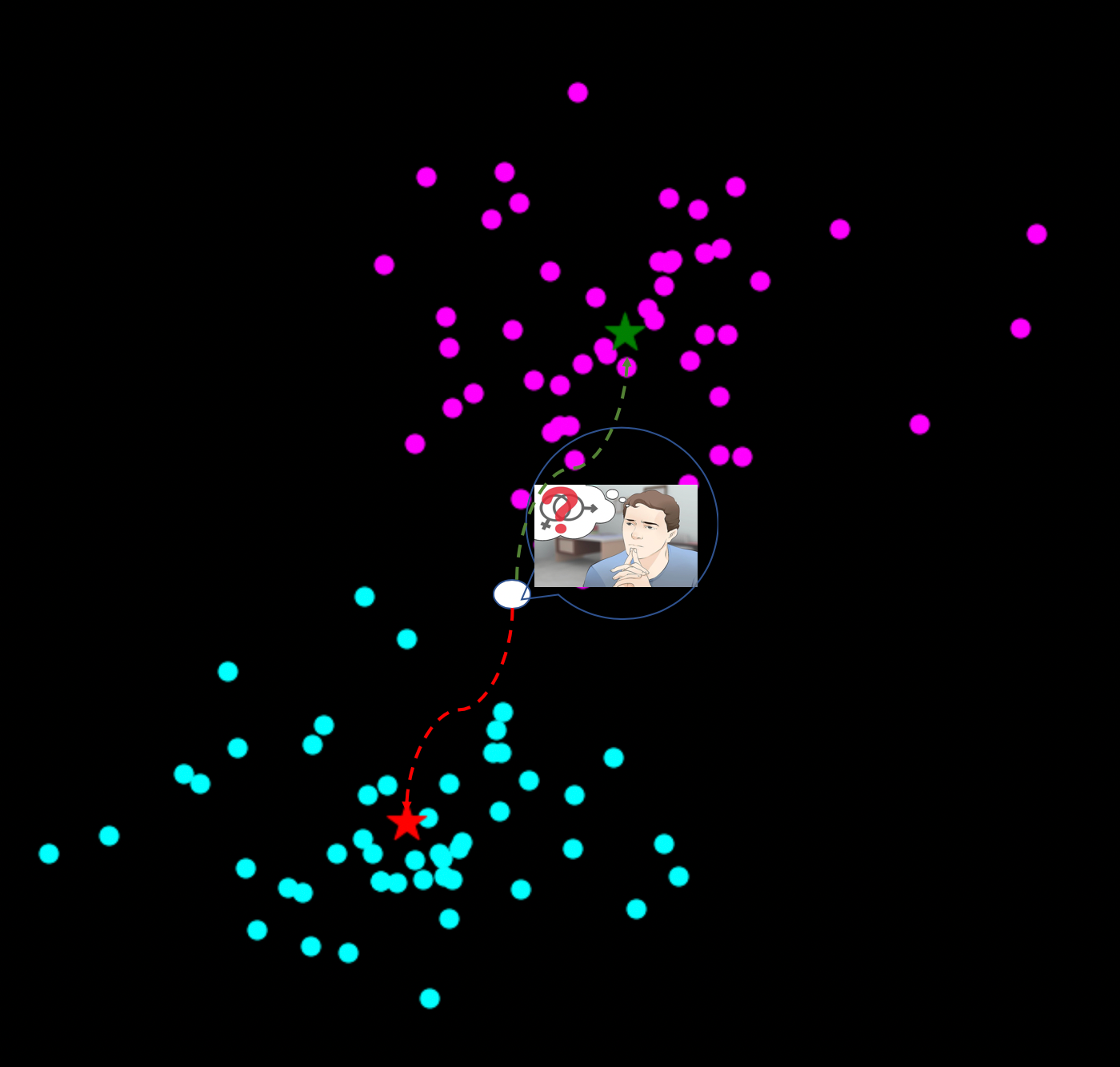

- Given kmeans iterative nature and the random initialization of centroids at the start of the algorithm, different initializations may lead to dissimilar clusters since kmeans algorithm may stuck in a local optimum and may non converge to global optimum. Therefore, it's recommended to run the algorithm using different initializations of centroids and pick the results of the run that that yielded the lower sum of squared distance.

- Assignment of examples isn't changing is the same thing equally no alter in within-cluster variation:

Implementation

We'll apply simple implementation of kmeans here to merely illustrate some concepts. And then nosotros volition use sklearn implementation that is more efficient take care of many things for united states of america.

Applications

kmeans algorithm is very popular and used in a variety of applications such as market partitioning, document clustering, paradigm division and epitome pinch, etc. The goal ordinarily when nosotros undergo a cluster analysis is either:

- Get a meaningful intuition of the construction of the information we're dealing with.

- Cluster-then-predict where different models will be congenital for different subgroups if we believe in that location is a wide variation in the behaviors of different subgroups. An case of that is clustering patients into different subgroups and build a model for each subgroup to predict the probability of the adventure of having heart assault.

In this mail, we'll apply clustering on two cases:

- Geyser eruptions partitioning (2D dataset).

- Paradigm compression.

Kmeans on Geyser's Eruptions Sectionalization

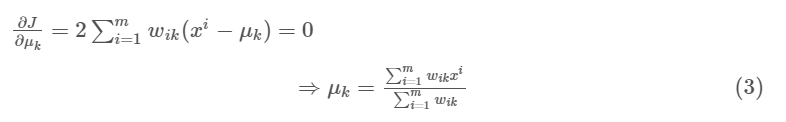

We'll outset implement the kmeans algorithm on 2D dataset and encounter how it works. The dataset has 272 observations and two features. The data covers the waiting fourth dimension between eruptions and the duration of the eruption for the Former Faithful geyser in Yellowstone National Park, Wyoming, Us. We will endeavour to observe K subgroups within the data points and group them accordingly. Beneath is the description of the features:

- eruptions (float): Eruption time in minutes.

- waiting (int): Waiting fourth dimension to next eruption.

Let'southward plot the data beginning:

We'll use this information because it's easy to plot and visually spot the clusters since its a ii-dimension dataset. It's obvious that we take 2 clusters. Let'south standardize the data kickoff and run the kmeans algorithm on the standardized data with K=ii.

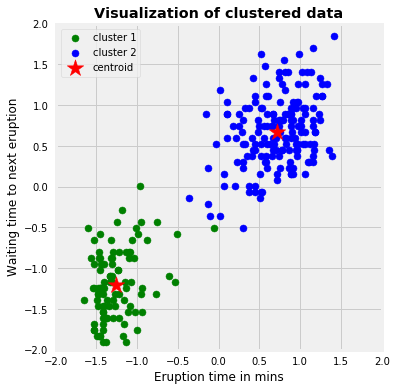

The above graph shows the scatter plot of the data colored by the cluster they belong to. In this instance, we chose K=2. The symbol '*' is the centroid of each cluster. Nosotros can recall of those 2 clusters every bit geyser had different kinds of behaviors nether different scenarios.

Next, we'll bear witness that different initializations of centroids may yield to different results. I'll apply ix dissimilar random_state to change the initialization of the centroids and plot the results. The title of each plot volition be the sum of squared distance of each initialization.

As a side note, this dataset is considered very easy and converges in less than 10 iterations. Therefore, to see the effect of random initialization on convergence, I am going to become with iii iterations to illustrate the concept. All the same, in real world applications, datasets are not at all that clean and dainty!

Every bit the graph above shows that we but ended up with two different ways of clusterings based on different initializations. We would option the one with the lowest sum of squared distance.

Kmeans on Image Compression

In this office, we'll implement kmeans to compress an image. The prototype that we'll be working on is 396 x 396 x three. Therefore, for each pixel location we would take 3 8-chip integers that specify the scarlet, greenish, and blue intensity values. Our goal is to reduce the number of colors to 30 and represent (compress) the photo using those thirty colors simply. To option which colors to use, we'll employ kmeans algorithm on the paradigm and treat every pixel as a data point. That means reshape the image from height x width x channels to (pinnacle * width) x channel, i,due east we would have 396 x 396 = 156,816 information points in 3-dimensional space which are the intensity of RGB. Doing and then will permit us to stand for the prototype using the 30 centroids for each pixel and would significantly reduce the size of the image past a gene of 6. The original epitome size was 396 x 396 x 24 = 3,763,584 bits; however, the new compressed prototype would be 30 x 24 + 396 x 396 10 4 = 627,984 bits. The huge deviation comes from the fact that we'll be using centroids every bit a lookup for pixels' colors and that would reduce the size of each pixel location to 4-flake instead of 8-scrap.

From now on we volition be using sklearn implementation of kmeans. Few thing to note here:

-

n_initis the number of times of running the kmeans with unlike centroid'southward initialization. The result of the all-time 1 will be reported. -

tolis the within-cluster variation metric used to declare convergence. - The default of

initis k-ways++ which is supposed to yield a improve results than just random initialization of centroids.

We can see the comparing between the original image and the compressed ane. The compressed epitome looks close to the original one which means we're able to retain the majority of the characteristics of the original paradigm. With smaller number of clusters we would have higher compression rate at the expense of epitome quality. Every bit a side note, this image compression method is called lossy data compression because we can't reconstruct the original image from the compressed image.

Evaluation Methods

Contrary to supervised learning where we have the ground truth to evaluate the model's operation, clustering assay doesn't have a solid evaluation metric that we can use to evaluate the outcome of different clustering algorithms. Moreover, since kmeans requires k as an input and doesn't learn information technology from data, in that location is no correct answer in terms of the number of clusters that we should have in any problem. Sometimes domain noesis and intuition may assistance but usually that is not the case. In the cluster-predict methodology, we can evaluate how well the models are performing based on different K clusters since clusters are used in the downstream modeling.

In this post nosotros'll cover two metrics that may give us some intuition nigh thou:

- Elbow method

- Silhouette assay

Elbow Method

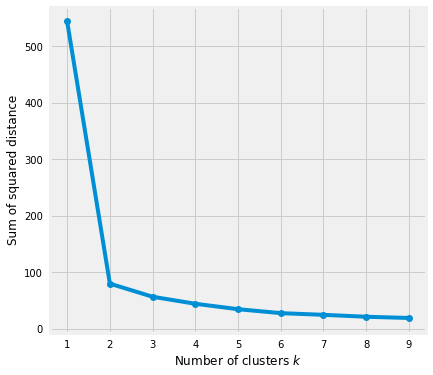

Elbow method gives us an idea on what a good yard number of clusters would be based on the sum of squared distance (SSE) between data points and their assigned clusters' centroids. We selection m at the spot where SSE starts to flatten out and forming an elbow. Nosotros'll use the geyser dataset and evaluate SSE for dissimilar values of k and run into where the curve might course an elbow and flatten out.

The graph above shows that k=2 is not a bad option. Sometimes it's nevertheless difficult to figure out a skillful number of clusters to use considering the curve is monotonically decreasing and may non show whatsoever elbow or has an obvious indicate where the curve starts flattening out.

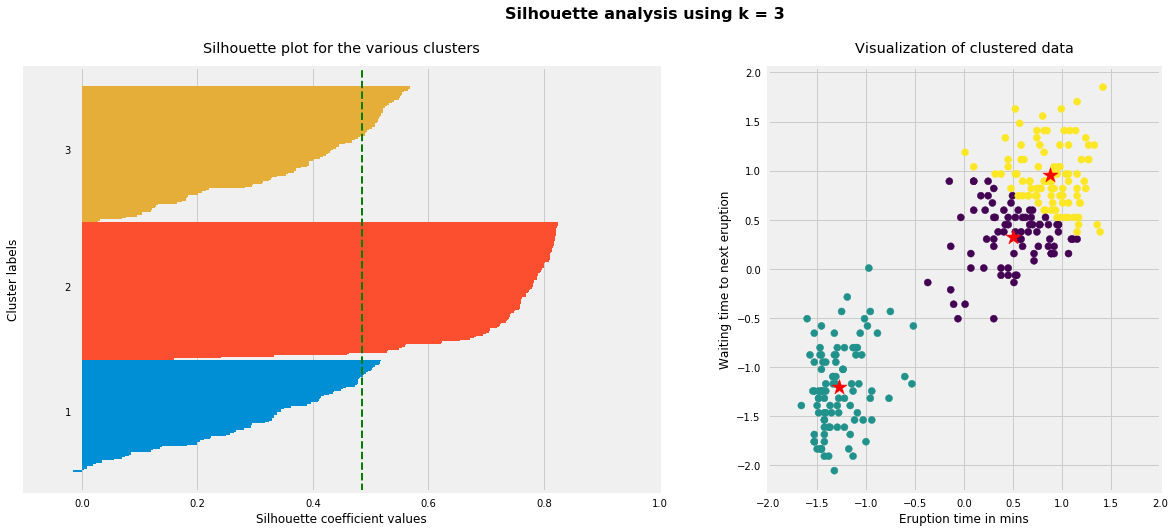

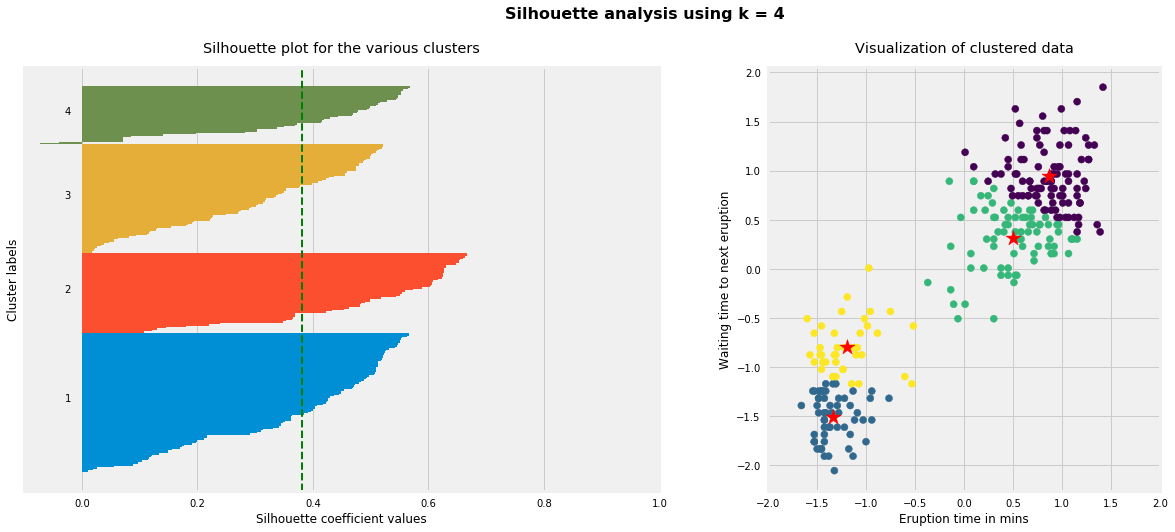

Silhouette Analysis

Silhouette analysis can be used to decide the degree of separation betwixt clusters. For each sample:

- Compute the average distance from all information points in the aforementioned cluster (ai).

- Compute the average distance from all information points in the closest cluster (bi).

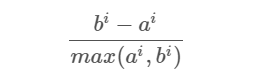

- Compute the coefficient:

The coefficient can accept values in the interval [-ane, 1].

- If it is 0 –> the sample is very close to the neighboring clusters.

- Information technology it is one –> the sample is far abroad from the neighboring clusters.

- It it is -1 –> the sample is assigned to the wrong clusters.

Therefore, we want the coefficients to be as big as possible and close to 1 to have a good clusters. We'll utilize hither geyser dataset once again because its cheaper to run the silhouette analysis and it is actually obvious that at that place is about likely only ii groups of data points.

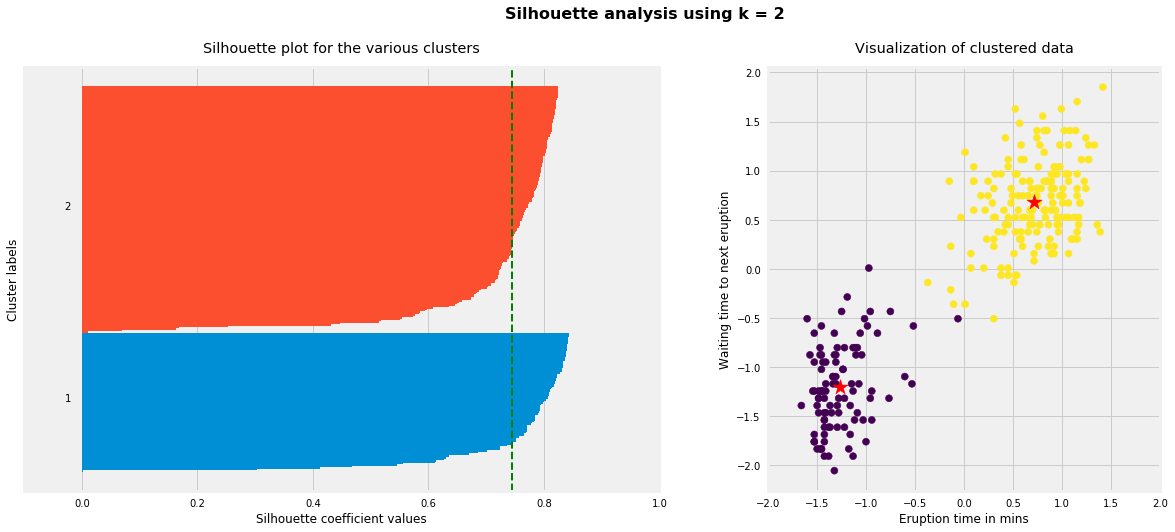

As the higher up plots prove, n_clusters=two has the all-time average silhouette score of effectually 0.75 and all clusters being higher up the average shows that information technology is actually a good choice. Too, the thickness of the silhouette plot gives an indication of how big each cluster is. The plot shows that cluster 1 has virtually double the samples than cluster two. Notwithstanding, as we increased n_clusters to 3 and iv, the average silhouette score decreased dramatically to effectually 0.48 and 0.39 respectively. Moreover, the thickness of silhouette plot started showing wide fluctuations. The bottom line is: Skilful n_clusters will accept a well in a higher place 0.5 silhouette boilerplate score as well equally all of the clusters accept higher than the average score.

Drawbacks

Kmeans algorithm is good in capturing structure of the information if clusters have a spherical-similar shape. It always try to construct a dainty spherical shape effectually the centroid. That ways, the infinitesimal the clusters take a complicated geometric shapes, kmeans does a poor job in clustering the data. We'll illustrate three cases where kmeans volition not perform well.

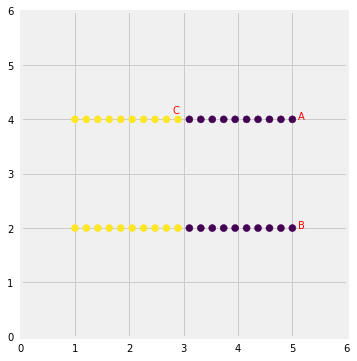

Starting time, kmeans algorithm doesn't permit data points that are far-abroad from each other share the aforementioned cluster even though they obviously belong to the same cluster. Below is an example of data points on two unlike horizontal lines that illustrates how kmeans tries to group half of the data points of each horizontal lines together.

Kmeans considers the point 'B' closer to point 'A' than bespeak 'C' since they have non-spherical shape. Therefore, points 'A' and 'B' will exist in the same cluster but betoken 'C' volition be in a unlike cluster. Note the Single Linkage hierarchical clustering method gets this right considering information technology doesn't carve up like points).

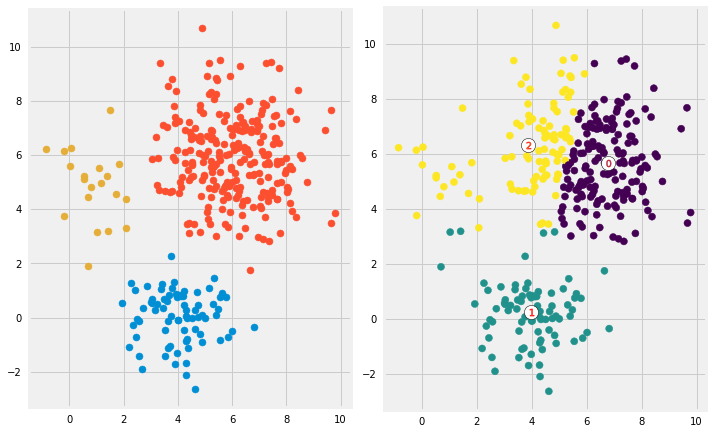

Second, we'll generate information from multivariate normal distributions with different means and standard deviations. And then we would have three groups of data where each group was generated from dissimilar multivariate normal distribution (unlike mean/standard deviation). One group volition take a lot more data points than the other ii combined. Adjacent, we'll run kmeans on the information with K=3 and see if it volition exist able to cluster the data correctly. To brand the comparison easier, I am going to plot beginning the data colored based on the distribution it came from. Then I will plot the same data but now colored based on the clusters they have been assigned to.

Looks similar kmeans couldn't figure out the clusters correctly. Since information technology tries to minimize the inside-cluster variation, information technology gives more than weight to bigger clusters than smaller ones. In other words, information points in smaller clusters may be left away from the centroid in order to focus more on the larger cluster.

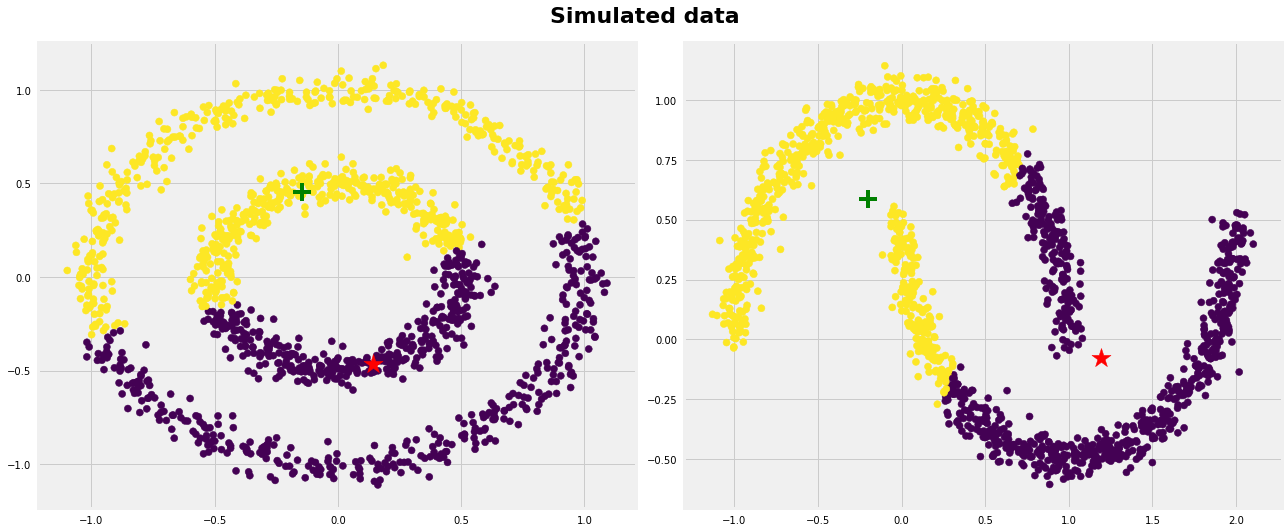

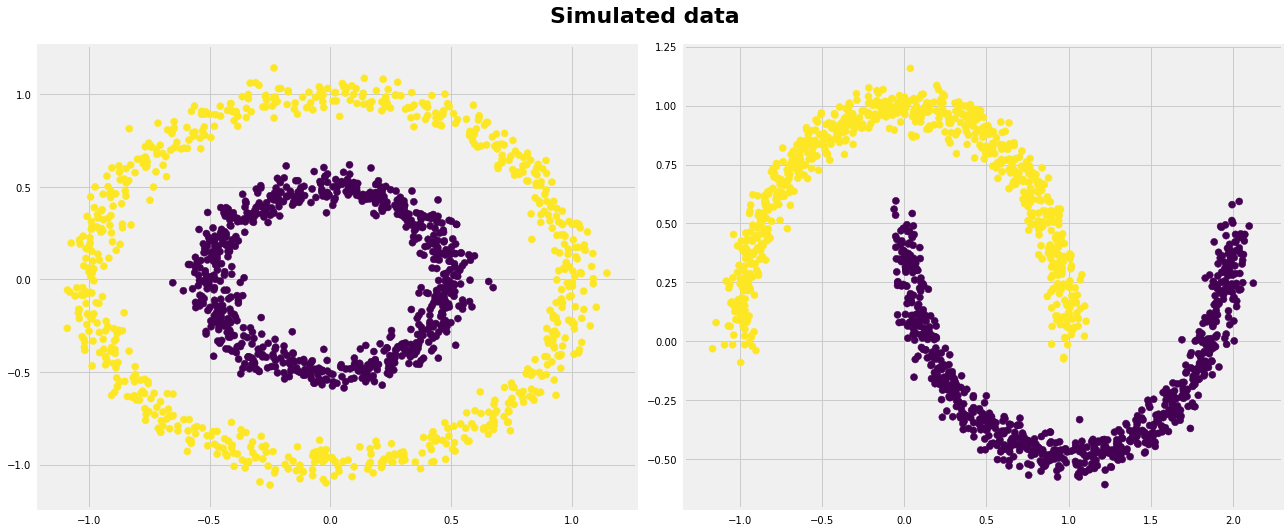

Last, nosotros'll generate information that have complicated geometric shapes such every bit moons and circles within each other and examination kmeans on both of the datasets.

Equally expected, kmeans couldn't effigy out the correct clusters for both datasets. However, we tin assist kmeans perfectly cluster these kind of datasets if we utilise kernel methods. The thought is nosotros transform to higher dimensional representation that make the information linearly separable (the same idea that we use in SVMs). Dissimilar kinds of algorithms work very well in such scenarios such as SpectralClustering, come across below:

Conclusion

Kmeans clustering is ane of the most popular clustering algorithms and normally the first affair practitioners apply when solving clustering tasks to get an thought of the structure of the dataset. The goal of kmeans is to group information points into singled-out non-overlapping subgroups. It does a very skilful task when the clusters have a kind of spherical shapes. However, it suffers as the geometric shapes of clusters deviates from spherical shapes. Moreover, it also doesn't acquire the number of clusters from the data and requires it to be pre-defined. To be a skillful practitioner, it's skillful to know the assumptions behind algorithms/methods so that you lot would have a pretty good idea virtually the forcefulness and weakness of each method. This will aid you determine when to use each method and under what circumstances. In this post, we covered both forcefulness, weaknesses, and some evaluation methods related to kmeans.

Below are the chief takeaways:

- Scale/standardize the data when applying kmeans algorithm.

- Elbow method in selecting number of clusters doesn't unremarkably work considering the error office is monotonically decreasing for all yards.

- Kmeans gives more weight to the bigger clusters.

- Kmeans assumes spherical shapes of clusters (with radius equal to the altitude betwixt the centroid and the furthest data point) and doesn't piece of work well when clusters are in unlike shapes such as elliptical clusters.

- If at that place is overlapping between clusters, kmeans doesn't have an intrinsic measure for uncertainty for the examples belong to the overlapping region in lodge to determine for which cluster to assign each information point.

- Kmeans may even so cluster the data fifty-fifty if information technology can't be amassed such as data that comes from uniform distributions.

The notebook that created this post can exist institute here.

Originally published at imaddabbura.github.io on September 17, 2018.

Source: https://towardsdatascience.com/k-means-clustering-algorithm-applications-evaluation-methods-and-drawbacks-aa03e644b48a

Postar um comentário for "Call to Run the Algorhythm Again"